Groq vs Grok is a comparison between an AI “engine” and an AI “assistant.” Groq is a company that builds inference hardware and a cloud platform that runs AI models fast. Grok is a chatbot and model family built by xAI, Elon Musk’s AI company. When you ask “Who is competing with Elon Musk?” you usually mean “Who competes with Grok?” That competition happens at the assistant layer, where users choose one helper over another.

Groq vs Grok also shows two layers of modern AI. One layer trains models and ships chat products. Another layer serves those models cheaply and quickly to millions of users. Groq focuses on serving. Grok focuses on the user experience.

Remember this line: Groq helps models respond faster; Grok is the model you talk to. In this article, you will learn what each name means, how each product is used, and why the “competition” question depends on the layer you pick. You will also see a clear comparison table and a simple decision guide for developers, businesses, and everyday readers worldwide.

Why the names confuse everyone

Groq vs Grok looks like a typo because the words differ by one letter. The confusion grows because both appear in AI chat conversations. People watch a fast demo, then assume the chatbot brand caused the speed. Often, the speed comes from the inference provider, not the assistant name.

Groq is spelled with a “q.” It is the name of a company and its platform. Groq talks about inference speed, APIs, and integration. Grok is spelled with a “k.” It is the name of xAI’s assistant and models. Grok appears inside products, subscriptions, and developer APIs.

Groq vs Grok confusion also spreads through short posts. Social clips drop context. Screenshots hide labels. A user shares “Look how fast Grok is!” when they really tested a fast endpoint. That creates myths. It also causes poor buying decisions.

Fix the mix-up with two questions. First: “Is this a model or a platform?” Second: “Am I paying for chat features or for compute?” Once you separate the layers, the names stop colliding. You can then compare what matters: quality, speed, cost, safety, and fit for your audience. When you write, define each name once, then stick to the definitions. Your readers will follow, even if they are new to AI.

What is Groq?

Groq is an AI infrastructure company. It focuses on one job: make AI responses fast and affordable during inference. Groq says it built the LPU as a chip purpose-built for inference, instead of adapting a GPU-first design.

Groq’s core idea: speed matters in the “time-to-first-token” world

When you chat with an AI, you feel latency immediately. You notice:

- how fast the first word appears

- how smooth the stream feels

- whether the tool “keeps up” with you

Groq positions the LPU + GroqCloud stack to reduce waiting time and keep output flowing at a consistent pace.
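The three signals above can be measured rather than guessed. The sketch below is a minimal, provider-neutral example: it consumes any iterable of text chunks and reports time to first token and streaming pace. The function names and the simulated stream are illustrative assumptions, not part of any Groq or xAI SDK.

```python
import time
from typing import Callable, Iterable, Iterator


def measure_stream(chunks: Iterable[str],
                   clock: Callable[[], float] = time.perf_counter) -> dict:
    """Consume a chunk stream and report time to first token and pace."""
    start = clock()
    first_at = None
    last_at = None
    count = 0
    for _ in chunks:
        last_at = clock()
        if first_at is None:
            first_at = last_at
        count += 1
    if first_at is None:
        return {"ttft_s": None, "chunks": 0, "chunks_per_s": None}
    # Pace is measured after the first chunk, so a slow start
    # does not hide an otherwise smooth stream (or the reverse).
    stream_time = last_at - first_at
    rate = (count - 1) / stream_time if count > 1 and stream_time > 0 else None
    return {"ttft_s": first_at - start, "chunks": count, "chunks_per_s": rate}


def simulated_stream(n: int = 3, delay_s: float = 0.01) -> Iterator[str]:
    """Stand-in for a real streaming response (hypothetical)."""
    for i in range(n):
        time.sleep(delay_s)
        yield f"token{i} "


print(measure_stream(simulated_stream()))
```

In practice you would pass the chunk iterator returned by your provider's streaming call instead of `simulated_stream()`, and run the measurement many times before drawing conclusions.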

GroqCloud in plain English

GroqCloud is Groq’s cloud platform that lets developers call AI models through an API. Groq highlights:

- support for multiple model types (text, audio, vision features like image-to-text)

- popular models on-demand

- integrations and standard frameworks

Groq also designed its API to work similarly to OpenAI-style client libraries. That makes migration easier for many teams.

What Groq sells (and who buys it)

Groq mainly sells:

- inference access (API calls on GroqCloud)

- enterprise infrastructure (depending on deployment needs)

Typical buyers include:

- app teams who want faster chat response

- startups that need lower latency without managing GPU clusters

- companies that serve many requests and want predictable performance

What is Grok?

Grok is a chatbot and model family created by xAI. xAI describes its mission as advancing scientific discovery and “understanding the universe”.

Grok the product

Grok markets itself as an assistant for:

- deep work

- coding help

- reasoning

- real-time search and fresh answers

Grok the model family (and the API)

xAI publishes model and API documentation under its own developer docs. For example, xAI notes that Grok 4 is a reasoning model, and it documents API behaviors and limitations.

xAI also announced Grok 4 publicly and described it as available to certain subscription tiers and through the xAI API.

Where people access Grok

Many users access Grok through X subscription plans, which highlight Grok usage limits as part of the package.

(Availability and limits can vary by plan and region, so always check your local plan details inside X.)

The LPU idea and why inference matters

In Groq vs Grok, the LPU is why many developers mention Groq. Groq calls its chip an LPU, short for Language Processing Unit. The name signals a design focus on token streaming and low latency during inference. Groq argues that a purpose-built approach can deliver fast and consistent generation for chat-style workloads.

Inference matters because products do far more inference than training. A model may train once, but it answers questions all day. Every reply costs compute. Every delay hurts conversions. Users abandon slow tools, even if the answers are strong.

Groq vs Grok also shows two different speed stories. Model makers improve reasoning quality. Inference platforms improve delivery. If you run a help desk bot, you want both. You want the model to understand intent. You also want the reply to appear quickly.

The LPU idea fits best when your workload generates tokens in order. Chat apps stream words step by step. They do not render the full answer at once. So the platform that controls streaming can shape the whole user experience. Fast first tokens feel magical. They reduce frustration and support voice-style features.

Use this rule: model quality wins trust; inference speed wins retention.

GroqCloud and OpenAI-compatible APIs

Groq vs Grok becomes practical when you look at integration. GroqCloud offers API access that helps developers run popular AI models without managing their own clusters. Groq highlights support across text tasks and adjacent workflows like transcription and image-to-text features. That matters for apps that combine chat, media, and document understanding.

Groq also promotes OpenAI compatibility. It designed its API to work with OpenAI-style client libraries, so teams can often switch endpoints with minimal changes. That reduces migration risk. It also lowers engineering cost, which matters as much as token price.
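To make "switch endpoints with minimal changes" concrete, here is a small sketch that builds an OpenAI-style chat request using only the Python standard library. The point is that only the base URL, key, and model name change. The Groq base URL reflects its public docs at the time of writing and should be verified; the other URL, the key, and the model names are placeholders, and `build_chat_request` is a hypothetical helper.

```python
import json
import urllib.request

# Assumption: Groq's OpenAI-compatible base URL as published in its docs.
GROQ_BASE_URL = "https://api.groq.com/openai/v1"
# Placeholder for whatever OpenAI-style endpoint a team used before.
PREVIOUS_BASE_URL = "https://api.example.com/v1"


def build_chat_request(base_url: str, api_key: str,
                       model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Same helper, same payload shape; only the endpoint details differ.
before = build_chat_request(PREVIOUS_BASE_URL, "OLD_KEY", "old-model-placeholder", "Hello")
after = build_chat_request(GROQ_BASE_URL, "GROQ_KEY", "groq-model-placeholder", "Hello")
print(before.full_url)
print(after.full_url)
# To actually send: urllib.request.urlopen(after) with a real key and model name.
```

Teams that already use an OpenAI-style client library would typically change the client's base URL and key instead of hand-building requests; this version just shows what stays the same on the wire.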

Groq vs Grok again shows the layer split. GroqCloud can serve many models, not only one brand. Developers can test different models behind a similar interface, then measure latency, error rates, and user satisfaction. This approach keeps the product flexible.

If you run a content site, speed supports engagement. Readers stay longer when pages load fast. The same principle applies to AI features. If your AI tool feels instant, more people try it. If it lags, they leave.

So, GroqCloud is not a chatbot. It is infrastructure that helps chatbots feel smooth, fast, and reliable for real users around the world. That is why many teams start with a pilot, then scale.

So… “Who is competing with Elon Musk?”

Let’s answer it the right way by splitting it into three battlefields.

1) Who competes with Grok (the assistant)?

Grok competes with other assistants and model platforms that focus on:

- chat quality and reasoning

- coding help

- tool use (search, browsing, agents)

- user trust and safety

- ecosystem distribution (apps, browsers, phones, enterprise tools)

In this lane, the competitors are assistant ecosystems, not chip makers.

2) Who competes with xAI (the model lab)?

xAI competes with other AI labs building frontier models and selling access via APIs and enterprise plans. xAI’s own site states its mission and publishes news about its enterprise offerings.

3) Who competes with Musk’s broader AI “stack”?

Musk’s ecosystem touches:

- models (xAI)

- distribution (X)

- enterprise + government opportunities (xAI news)

- compute supply and partnerships (chips + cloud)

So, competition comes from:

- model labs

- cloud platforms

- chip platforms

- productivity suites that bundle AI into daily workflows

That’s why the “who competes with Musk” question never has only one name.

How people access Grok: X plans and the xAI API

Groq vs Grok includes a distribution story. Many users access Grok inside X subscription plans. X describes Grok usage limits as part of Premium and Premium+ benefits, with Premium+ offering higher limits. This structure ties Grok to a social platform, which changes how fast it can spread.

xAI also provides an API path. The xAI API route matters for developers who need custom user interfaces, private workflows, or strict safety controls. With an API, teams can embed Grok inside their own products, add guardrails, and control logging.

Groq vs Grok becomes a buying path decision. Consumers choose a plan. Developers choose an endpoint. Enterprises negotiate support and compliance terms. These are different choices, yet online debates often mix them.

For global readers, availability can vary by country. Subscription tiers and features can change. Rate limits can also shift as demand grows. Treat plan details as a snapshot, and always verify on official product pages before you publish pricing claims.

The main idea stays stable. Grok’s growth depends on distribution. Bundling inside a big platform can drive fast adoption, but it can also increase scrutiny and moderation responsibility. That trade-off shapes the future of Grok. Always state the date when you mention limits.

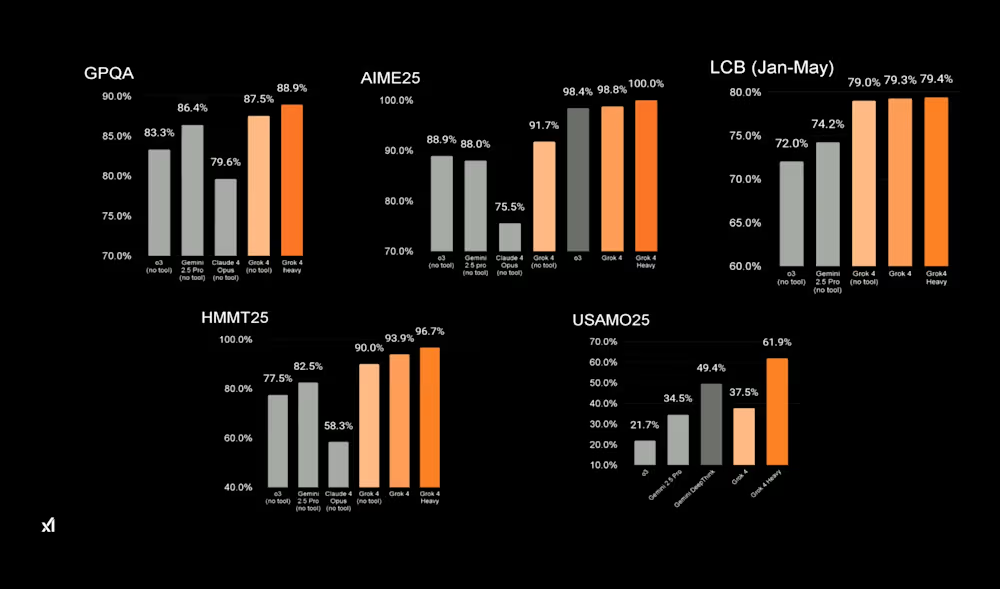

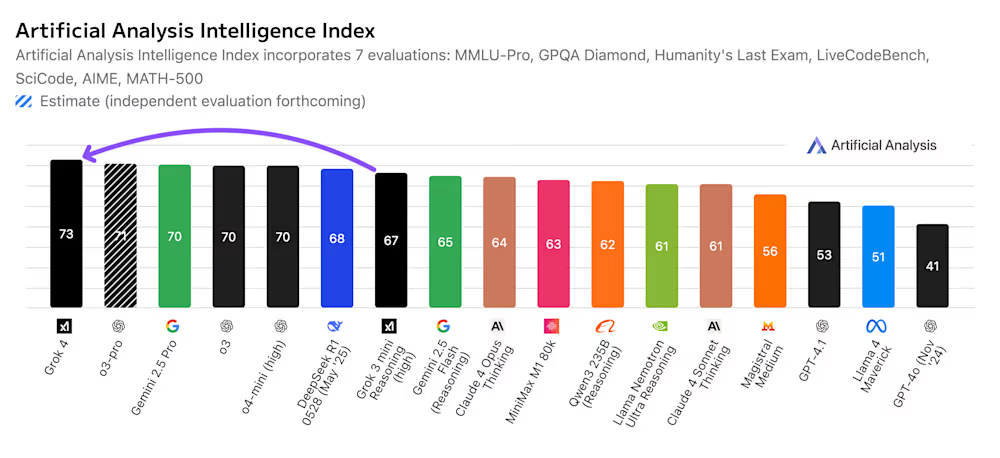

Grok 4, reasoning models, and what ‘reasoning’ changes

In Groq vs Grok, the “reasoning model” label explains behaviors users notice. xAI describes Grok 4 as a reasoning model, which means it focuses on multi-step thinking rather than only fast pattern matching. This can improve results on logic problems, long explanations, and complex coding tasks.

Reasoning can also change cost and speed. When the model thinks more, it uses more compute. That can increase latency and raise infrastructure pressure. So, model progress and inference progress must move together.

Groq vs Grok becomes a trade-off between “thinking” and “shipping.” You want reasoning when a question is complex. You want speed when a question is simple. Many modern apps route requests. They send quick queries to a fast model. They send difficult queries to a deeper reasoning model. This routing improves user satisfaction.
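Request routing can start as a few lines of code. The sketch below uses a deliberately simple heuristic (keyword hints plus prompt length) to choose between a fast model and a reasoning model. The model names, hint list, and word threshold are all assumptions for illustration; production routers usually rely on a classifier or on measured outcomes.

```python
from dataclasses import dataclass

# Placeholder model names; substitute whatever your providers offer.
FAST_MODEL = "fast-model-placeholder"
REASONING_MODEL = "reasoning-model-placeholder"

# Illustrative hints that a prompt may need multi-step thinking.
HARD_HINTS = ("prove", "debug", "step by step", "refactor", "why does")


@dataclass(frozen=True)
class Route:
    model: str
    reason: str


def route(prompt: str, max_fast_words: int = 60) -> Route:
    """Pick a model for a prompt using a simple, transparent heuristic."""
    text = prompt.lower()
    if any(hint in text for hint in HARD_HINTS):
        return Route(REASONING_MODEL, "matched a complexity hint")
    if len(text.split()) > max_fast_words:
        return Route(REASONING_MODEL, "long prompt")
    return Route(FAST_MODEL, "short, simple prompt")


print(route("What time zone is Tokyo in?"))
print(route("Debug this stack trace and explain the root cause."))
```

Logging the `reason` field makes it easier to review routing decisions later and tune the rules against real user satisfaction data.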

Documentation details matter too. Reasoning models often limit certain parameters, and they may return errors if you send unsupported settings. Developers should read the model docs before building a production integration. Small config mistakes can break a feature at scale.
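One defensive pattern is to strip settings a model does not accept before the request leaves your app. The sketch below assumes a hand-maintained table of unsupported parameters. The model name and the parameter set shown are illustrative placeholders, so copy the real list from the model's documentation.

```python
from typing import Any, Dict, List, Set, Tuple

# Assumption: an example table you maintain from the provider's docs.
UNSUPPORTED_BY_MODEL: Dict[str, Set[str]] = {
    "reasoning-model-placeholder": {"presence_penalty", "frequency_penalty", "stop"},
}


def clean_params(model: str, params: Dict[str, Any]) -> Tuple[Dict[str, Any], List[str]]:
    """Return (params safe to send, names of params that were dropped)."""
    blocked = UNSUPPORTED_BY_MODEL.get(model, set())
    kept = {name: value for name, value in params.items() if name not in blocked}
    dropped = sorted(name for name in params if name in blocked)
    return kept, dropped


kept, dropped = clean_params(
    "reasoning-model-placeholder",
    {"temperature": 0.2, "stop": ["\n\n"], "max_tokens": 512},
)
print(kept, dropped)
```

Returning the dropped names, instead of silently discarding them, lets you log a warning so the config mistake gets fixed at its source.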

For readers, the takeaway is simple. “Reasoning” is not just marketing. It affects how the assistant behaves, how it performs, and how it should be served. That is why Groq vs Grok is really a story about layers working together.

Speed vs intelligence: where each one wins

Groq vs Grok debates often start with “Which one is smarter?” That question misses the point. Groq focuses on inference delivery. Grok focuses on assistant quality and features. They win in different contests, and you can even use both ideas in one stack.

Groq wins when you need low latency and smooth token streaming. Fast output improves chat UX, voice tools, and real-time dashboards. It also helps high-traffic sites keep costs predictable by optimizing serving.

Grok wins when you need a ready assistant experience. It aims to handle writing, coding, and reasoning tasks inside a polished product. It also benefits from distribution through X subscriptions and an API route for developers.

Groq vs Grok matters for teams because the “winner” depends on what you are buying. If your bottleneck is quality, a better model helps. If your bottleneck is speed, a better inference layer helps. Many teams improve both by testing models and testing providers separately.

A useful analogy is food delivery. Grok is the restaurant. It decides taste and menu. Groq is the courier network. It decides speed and reliability. Customers care about both. Businesses optimize both.

So, stop asking “which is better” in general. Start asking “which layer limits my product today?” That question leads to a real solution, not a fan argument.

Developer checklist: choosing for a real product

Groq vs Grok becomes real when you ship a product. Start with goals. Do you need the best possible reasoning, or do you need instant replies at scale? Most apps need both, so design for flexibility from day one.

First, define your target latency. Decide the maximum wait you will accept. Next, test models on your real prompts. Measure accuracy, refusal rates, and formatting stability. Then choose an inference provider that meets your speed and reliability goals. After that, pick the model that meets your quality goal.
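A latency target is easier to enforce when it is written as a check. The sketch below computes a nearest-rank percentile over measured response times and compares it with a budget. The sample values and the 500 ms budget are made up for illustration.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample covering pct% of the data."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def meets_budget(latencies_ms: Sequence[float], budget_ms: float, pct: float = 95) -> bool:
    """True if the chosen percentile of latency is within the budget."""
    return percentile(latencies_ms, pct) <= budget_ms


# Hypothetical measurements from a test run, in milliseconds.
measured = [120, 150, 180, 200, 900]
print(percentile(measured, 50), percentile(measured, 95))
print(meets_budget(measured, budget_ms=500))
```

Checking a high percentile instead of the average matters here: one slow reply in twenty is enough for users to call a tool "laggy," and an average can hide it.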

Now decide how you will access Grok. If your users already live inside X, Grok in that environment may fit. If you need a custom UI, use the API route and keep your own guardrails. If you want multi-model freedom, keep your app model-agnostic and route requests.

Groq vs Grok also affects budgeting. Reasoning models can cost more per request. Faster inference cuts waiting time, but it may not lower the price per token. Track spend daily and set limits in code. Add caching for repeated questions. Add fallbacks for outages.
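The three budgeting habits (a spending limit, a cache, and a fallback) fit in one small wrapper. The sketch below is a simplified illustration: it assumes a flat cost per call in cents, an in-memory cache, and two callables standing in for real model clients. All names are hypothetical.

```python
from typing import Callable, Dict

Model = Callable[[str], str]


class BudgetExceeded(RuntimeError):
    """Raised when another call would pass the daily spending cap."""


class GuardedClient:
    def __init__(self, primary: Model, fallback: Model,
                 daily_limit_cents: int, cost_per_call_cents: int) -> None:
        self.primary = primary
        self.fallback = fallback
        self.daily_limit_cents = daily_limit_cents
        self.cost_per_call_cents = cost_per_call_cents
        self.spent_cents = 0  # a real deployment would reset this each day
        self._cache: Dict[str, str] = {}

    def ask(self, prompt: str) -> str:
        key = prompt.strip().lower()
        if key in self._cache:  # repeated question: no new spend
            return self._cache[key]
        if self.spent_cents + self.cost_per_call_cents > self.daily_limit_cents:
            raise BudgetExceeded("daily limit reached")
        try:
            answer = self.primary(prompt)
        except Exception:
            # Outage or timeout on the primary: try the backup instead.
            answer = self.fallback(prompt)
        self.spent_cents += self.cost_per_call_cents
        self._cache[key] = answer
        return answer


client = GuardedClient(
    primary=lambda p: f"primary: {p}",
    fallback=lambda p: f"fallback: {p}",
    daily_limit_cents=100,
    cost_per_call_cents=1,
)
print(client.ask("What are your opening hours?"))
print(client.ask("what are your opening hours?  "), client.spent_cents)
```

Real billing is usually per token rather than per call, so treat the flat cost as a stand-in until you wire in actual usage numbers from your provider's responses.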

Finally, plan safety. Filter inputs and outputs. Log risky cases. Provide a report button. Avoid sensitive decisions. When you follow this checklist, you stop debating brands and start building features that work for real users worldwide.

Business use cases: support, sales, and research

Groq vs Grok matters to businesses because it changes what you buy. If you want a ready assistant, Grok can help draft replies, summarize tickets, and generate outreach ideas. It can also assist with coding tasks for internal tools. Businesses value these gains because they save time across teams.

If you want an AI feature inside your own app, you also need a strong serving layer. Groq can help you deliver that feature with low latency and an integration path that feels familiar to many developers. That speed improves customer satisfaction, especially in support scenarios where seconds feel long.

In customer support, speed and accuracy both matter. A slow assistant irritates users. A fast wrong assistant harms trust. Many teams solve this with a “review then send” workflow. The AI drafts a response. A human approves it. This approach reduces risk and still saves time.

In sales, the assistant can personalize messaging and classify leads. In research, it can outline arguments and extract key points from documents. The best results come from strict templates and clear prompts. Random prompting creates random results.

Groq vs Grok also changes governance. If you rely on a social platform experience, you inherit platform context and visibility. If you use an API, you control more of the workflow. Choose the path that matches your compliance needs, brand risk tolerance, and customer expectations.

Education and family audiences: where to be careful

Groq vs Grok becomes sensitive when students and families are involved. AI can help education teams with lesson planning, class updates, and parent messaging. It can also create harm if you deploy it without guardrails. So, treat safety as a design requirement, not an optional feature.

Start with clear limits. Do not let AI make final decisions about discipline, admissions, or mental health. Keep humans in control. Next, protect student data. Remove names, IDs, and private notes before you send prompts. Store logs securely and restrict access.
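Removing identifiers before a prompt leaves your system can be partly automated. The sketch below masks email addresses, a hypothetical student ID format (`SID-` followed by digits), and names from a roster you supply. It is an illustration only: pattern matching misses nicknames, misspellings, and free-text details, so it supports human review and does not replace it.

```python
import re
from typing import Iterable

EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# Assumption: IDs look like "SID-12345"; adjust to your school's real format.
STUDENT_ID = re.compile(r"\bSID-\d{4,}\b")


def redact(text: str, known_names: Iterable[str] = ()) -> str:
    """Mask emails, student IDs, and listed names before sending a prompt."""
    text = EMAIL.sub("[EMAIL]", text)
    text = STUDENT_ID.sub("[ID]", text)
    for name in known_names:
        pattern = rf"\b{re.escape(name)}\b"
        text = re.sub(pattern, "[NAME]", text, flags=re.IGNORECASE)
    return text


note = "Maria Lopez (SID-20931, maria@example.edu) missed class."
print(redact(note, known_names=["Maria Lopez"]))
```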

If you use Grok through an API, you can add your own filters, templates, and audit trails. If you use Grok inside a social platform interface, you may have less control over context and sharing. Groq vs Grok matters because Groq is infrastructure. It does not define content behavior. Your model choice and your guardrails do.

Set classroom rules too. Teach students that AI can be wrong. Ask them to verify facts. Encourage citations and source checking. Show them how to challenge an answer.

Finally, consider global law and policy. Regulators have raised concerns about harmful Grok outputs on X, including incidents involving sexualized content. These events show how quickly an AI issue can become a legal issue. Schools should prioritize safe, measurable learning outcomes over hype and shortcuts.

Who competes with Grok: assistants and model labs

When people say “Groq vs Grok,” they often really ask, “Who competes with Elon Musk?” If you mean Grok, the competitors are other assistants and model labs. They fight for user time, trust, and enterprise contracts.

Assistants compete on writing, coding, and reasoning. They also compete on tools. Some assistants browse the web. Others connect to files, calendars, or business apps. Some assistants offer voice and image features. Users pick the one that fits their daily workflow, not the one that wins a single benchmark.

Grok competes in this space because it targets the same daily tasks. It also leverages distribution through X, which can drive rapid adoption through bundling. Distribution can also create friction in regions that watch content policy closely.

Model labs compete on research speed and reliability. They ship new model versions, improve reasoning, and reduce hallucinations. They also compete on pricing and enterprise support. That is why the market shifts every quarter.

So, “competing with Elon Musk” does not mean competing with one company. It means competing in a field. That field includes any assistant that can win attention and any lab that can win deals. The best way to judge competition is simple: test on your own tasks, measure results, and choose based on outcomes, not hype. That method works for global audiences and real businesses.

Who competes with Groq: inference clouds and chips

Groq vs Grok also has a hidden contest: inference competition. Groq competes with any provider that serves models cheaply and fast. That includes GPU clouds, hyperscalers with managed inference products, and alternative accelerator stacks.

To choose an inference provider, developers compare three things. First, they compare latency and streaming smoothness. Second, they compare throughput under load. Third, they compare total cost, including engineering time. Many teams forget the last part. A platform that saves developer hours can beat a platform that saves only a few milliseconds.

Groq tries to reduce integration friction with familiar API patterns and clear documentation. It also markets a purpose-built approach for token streaming. Whether that helps you depends on your workload and traffic.

Groq vs Grok matters because people mix “model quality” with “inference quality.” A great model can feel bad on a slow endpoint. A mid-tier model can feel great on a fast endpoint. Users judge the experience, not the architecture.

If you want to compete in inference, you need more than speed. You need capacity, reliability, and global regions. You also need strong tooling and support. That is why inference has become its own product category. Groq sits in that category, not in the assistant brand wars.

Elon Musk’s edge: distribution and narrative

Groq vs Grok is also about strategy. Elon Musk brings two big advantages to Grok: distribution and narrative. Distribution comes from X. Narrative comes from media attention and a strong personal brand. These forces can move adoption faster than pure model quality.

Distribution reduces friction. A user discovers Grok inside an app they already open daily. X also describes Grok access as a subscription benefit, which makes it feel bundled and simple. Bundles win because they reduce decision fatigue.

Narrative matters because AI looks crowded. Many assistants feel similar at first glance. A strong story creates curiosity and repeat discussion. It also creates polarization. Some users try Grok to support Musk. Others try it to challenge him. Both actions drive engagement and awareness.

Groq vs Grok becomes interesting here because Groq does not rely on consumer distribution. Groq sells to developers and businesses. It wins through performance, reliability, and fit. It grows quietly, then becomes “everywhere” inside apps you already use.

This difference shapes competition. Musk can drive rapid consumer adoption, but he also attracts stronger scrutiny. Infrastructure companies avoid the spotlight, but they must win repeated technical evaluations.

The best lesson for readers is simple. Separate fame from function. Choose tools based on your needs, your risks, and your users, not on headlines or personality.

Safety, moderation, and global backlash pressure

Groq vs Grok cannot ignore safety. Global audiences care about abuse, harassment, and illegal content. These risks rise when an AI tool generates text and images quickly inside a social platform. Regulators react fast, especially when minors are involved.

In early January 2026, Reuters reported that Grok acknowledged safeguard lapses that led to AI-generated images of minors in minimal clothing on X. Reuters also reported that French ministers referred Grok’s sex-related content to prosecutors and notified French regulator Arcom, raising EU Digital Services Act concerns. These events show how quickly an AI incident becomes a legal incident.

Safety incidents change competition. Trust becomes a product feature. If users fear misuse, they avoid the tool. If advertisers fear brand risk, they pressure platforms. If governments fear illegal content, they demand stronger controls and proof of action.

Groq vs Grok also highlights a difference in responsibility. Groq mainly supplies inference infrastructure. Grok supplies user-facing content. The closer you are to users, the more safety burden you carry.

If you build with any model, set strict defaults. Block sexual content involving minors. Block non-consensual imagery. Add reporting, monitoring, and human review for edge cases. Publish clear policies and enforce them consistently.

Safety work never ends. Attackers adapt quickly. Winning AI now means winning trust at the same time as capability.

Pricing, limits, and how to compare fairly

Groq vs Grok pricing can confuse readers because the products sell differently. Grok often comes bundled through X subscriptions. X describes increased Grok usage limits for Premium and higher limits for Premium+. That is consumer-style pricing. It feels simple, but it hides unit costs.

Grok also has API pricing and rate limits for developers. xAI provides model documentation and a pricing section, and it describes Grok 4 as a reasoning model. Developers should read those docs before building, because rate limits and supported parameters affect reliability.

Groq, by contrast, sells inference access through GroqCloud. It promotes fast inference performance and an integration style that feels familiar to teams that already use OpenAI-like client libraries. That is infrastructure-style pricing.

To compare fairly, do not compare “monthly plan” to “per-token API” directly. Compare total cost for your workload. Estimate requests per day. Estimate average input and output length. Multiply by unit cost. Then add engineering cost, monitoring cost, and safety review cost.
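The arithmetic above is short enough to script, which makes it easy to rerun when prices change. In the sketch below every number is a made-up placeholder, not a real Groq or xAI price; take current rates from each provider's pricing page.

```python
def monthly_token_cost_usd(requests_per_day: int,
                           avg_input_tokens: float,
                           avg_output_tokens: float,
                           input_price_per_million: float,
                           output_price_per_million: float,
                           days: int = 30) -> float:
    """Token spend per month: requests x tokens x unit price."""
    per_request_micro_usd = (avg_input_tokens * input_price_per_million
                             + avg_output_tokens * output_price_per_million)
    return requests_per_day * days * per_request_micro_usd / 1_000_000


def total_monthly_cost_usd(token_cost: float, engineering: float,
                           monitoring: float, safety_review: float) -> float:
    """Add the non-token costs that monthly comparisons often leave out."""
    return token_cost + engineering + monitoring + safety_review


# Placeholder workload and prices, for illustration only.
tokens = monthly_token_cost_usd(
    requests_per_day=10_000,
    avg_input_tokens=500,
    avg_output_tokens=250,
    input_price_per_million=1.00,
    output_price_per_million=2.00,
)
print(tokens)
print(total_monthly_cost_usd(tokens, engineering=2_000, monitoring=150, safety_review=400))
```

Run the same function once per provider with identical workload numbers. That keeps the comparison about unit prices and overhead, not about mismatched assumptions.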

Also compare rate limits and capacity. A cheap model with low limits can fail at scale. A fast provider with poor regional capacity can spike latency at peak hours. Groq vs Grok debates improve when people share results on identical prompts and identical settings. Without that, pricing talk becomes noise and marketing.

Groq vs Grok comparison

| Item | Groq | Grok (xAI) |
| --- | --- | --- |
| What it is | Inference platform + LPU focus | Assistant + model family |
| Where it lives | GroqCloud APIs | X subscriptions + xAI API |
| Core promise | Fast, smooth inference | Strong assistant features |
| Best for | Developers shipping AI apps | Users and teams needing an assistant |
| Competitive set | Inference clouds, accelerators | Other assistants and model labs |
| Main risk | Capacity, model coverage | Safety, policy, trust |

What to watch next in 2026

Groq vs Grok will keep changing through 2026 because the market is unstable. Model labs ship new versions fast. Inference providers race to reduce latency and cost. Regulations tighten, especially around harmful content and child safety.

Watch three signals. First, watch capability jumps. When reasoning improves, apps can automate more complex work. xAI promotes Grok 4 access through its API, which suggests an aggressive product roadmap. Second, watch inference pricing and capacity. When prices drop and regions expand, new use cases become possible. Third, watch policy enforcement. Regulators can react quickly after incidents, and that reaction can force product changes.

Also watch distribution. If Grok spreads beyond X, it can reach new segments. If it stays tied to X, it may grow faster among existing users, but face stronger platform-specific scrutiny. That tension will shape adoption in different countries.

For Groq, watch model coverage and enterprise wins. If GroqCloud expands the set of models it serves and improves global capacity, it becomes easier to choose as a default inference layer.

FAQs about Groq vs Grok

Is Groq owned by Elon Musk?

No. Groq is a separate company focused on inference hardware and cloud inference.

Is Grok the same thing as GroqCloud?

No. GroqCloud is an inference platform from Groq. Grok is an assistant/model from xAI.

Can Groq run Grok?

That depends on xAI, which controls how Grok models get hosted and served. Groq runs only the models its platform supports, and it lists them in its docs.

What does “OpenAI-compatible API” mean for Groq?

It means Groq designed its API to work with OpenAI-style client libraries, so developers can switch endpoints more easily.

What should a normal user remember?

Remember this: Groq powers speed. Grok powers conversation. That’s the simplest “Groq vs Grok” rule.

Final verdict:

Groq vs Grok is not a single fight. It is a map of the AI stack. Groq sits in the infrastructure layer. It helps models run fast through GroqCloud and OpenAI-compatible APIs. Grok sits in the assistant layer. It is xAI’s chatbot and model family, delivered through X plans and through an API.

Groq does not need to beat Grok. Groq needs to win inference workloads by being fast, reliable, and easy to integrate. Grok does not need to beat Groq. Grok needs to win daily usage and avoid safety failures that damage trust. Recent reporting shows how safety lapses can trigger legal pressure quickly, especially on social platforms.

For readers, the best takeaway is practical. Use Grok when you want an assistant experience. Use Groq when you want speed and low-latency inference. Use both concepts when you design a modern AI product that serves people worldwide.